Apache Flume Tutorial for Beginners TechVidvan

Get to Know Apache Flume from Scratch! Analytics Vidhya

About the Tutorial

Flume is a standard, simple, robust, flexible, and extensible tool for ingesting data from various data producers (such as web servers) into Hadoop. In this tutorial, we use simple, illustrative examples to explain the basics of Apache Flume and how to use it in practice.

Apache Flume Tutorial Twitter Data Streaming Using Flume Hadoop T…

Apache Flume Tutorial Apache Hadoop Tutorial Flume Demo

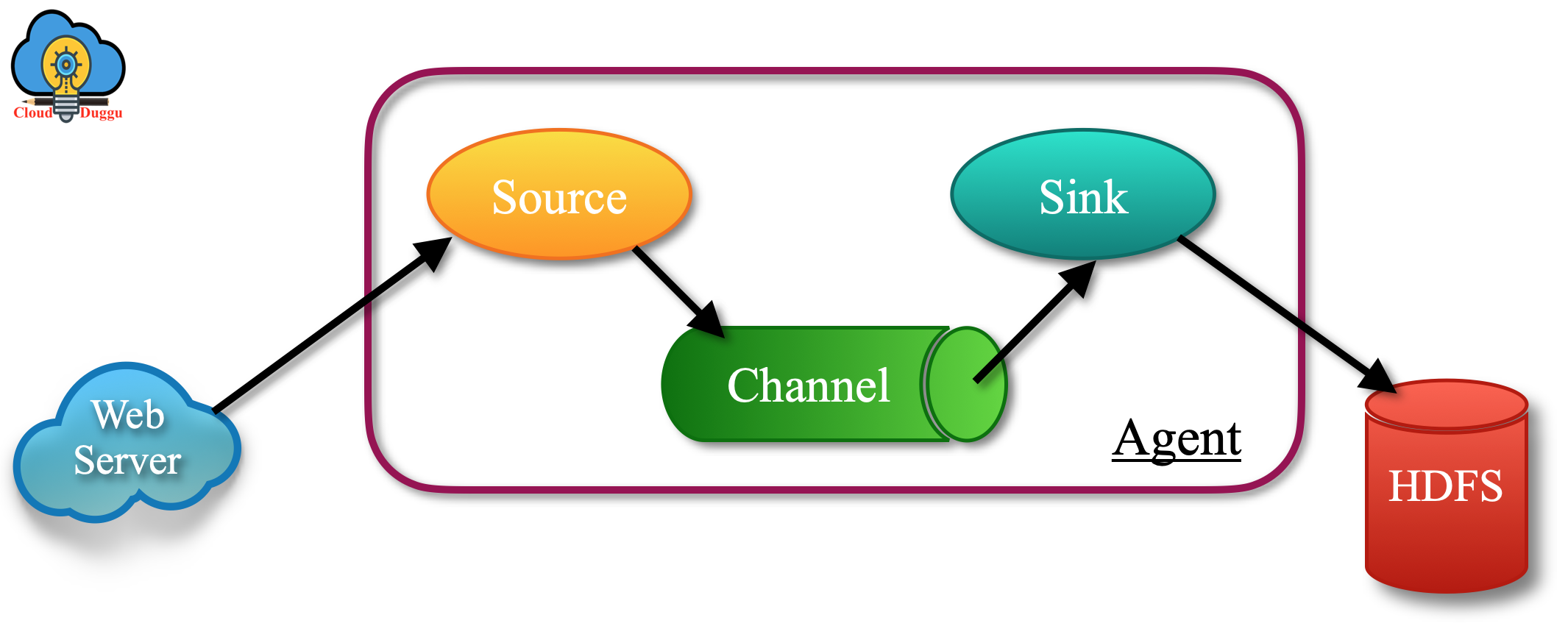

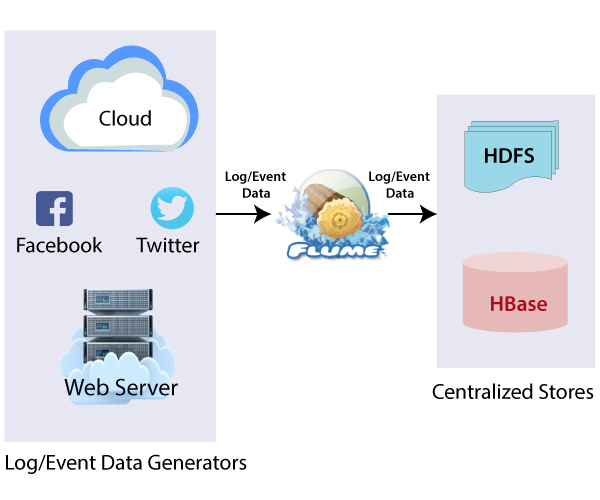

Apache Flume is a distributed, reliable, and available system for efficiently collecting, aggregating, and moving large amounts of log data from many different sources to a centralized data store. Its use is not restricted to log data aggregation. Because data sources are customizable, Flume can be used to transport massive quantities of event data, including network traffic data, social-media-generated data, email messages, and almost any other data source.
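The collect-buffer-deliver flow described above can be sketched in plain Java. This is a conceptual model only. It assumes a single agent with one source, one bounded in-memory channel, and one sink. The class `MiniAgent` and its methods are hypothetical and are not part of Flume's API. The bounded queue stands in for a channel with a fixed capacity, so a full channel pushes back on the source instead of silently losing data.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical conceptual model of one agent: source -> channel -> sink.
// None of these names belong to Flume's API.
final class MiniAgent {
    // Channel: a bounded buffer between the source and the sink.
    private final BlockingQueue<byte[]> channel;
    // Stand-in for the centralized data store (HDFS, HBase, ...).
    private final List<String> store = new ArrayList<>();

    MiniAgent(int channelCapacity) {
        this.channel = new ArrayBlockingQueue<>(channelCapacity);
    }

    // Source side: accept one log line; returns false when the channel is full.
    boolean ingest(String logLine) {
        return channel.offer(logLine.getBytes(StandardCharsets.UTF_8));
    }

    // Sink side: move everything currently buffered into the store.
    int drainToStore() {
        List<byte[]> batch = new ArrayList<>();
        channel.drainTo(batch);
        for (byte[] body : batch) {
            store.add(new String(body, StandardCharsets.UTF_8));
        }
        return batch.size();
    }

    List<String> store() {
        return store;
    }

    public static void main(String[] args) {
        MiniAgent agent = new MiniAgent(2);
        System.out.println(agent.ingest("GET /index.html 200")); // true
        System.out.println(agent.ingest("GET /about.html 404")); // true
        System.out.println(agent.ingest("GET /extra.html 200")); // false: channel full
        System.out.println(agent.drainToStore());                 // 2
        System.out.println(agent.store());
    }
}
```

A real agent runs its source and sink independently and wraps channel puts and takes in transactions. This sketch stays single-threaded to keep the flow easy to follow.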

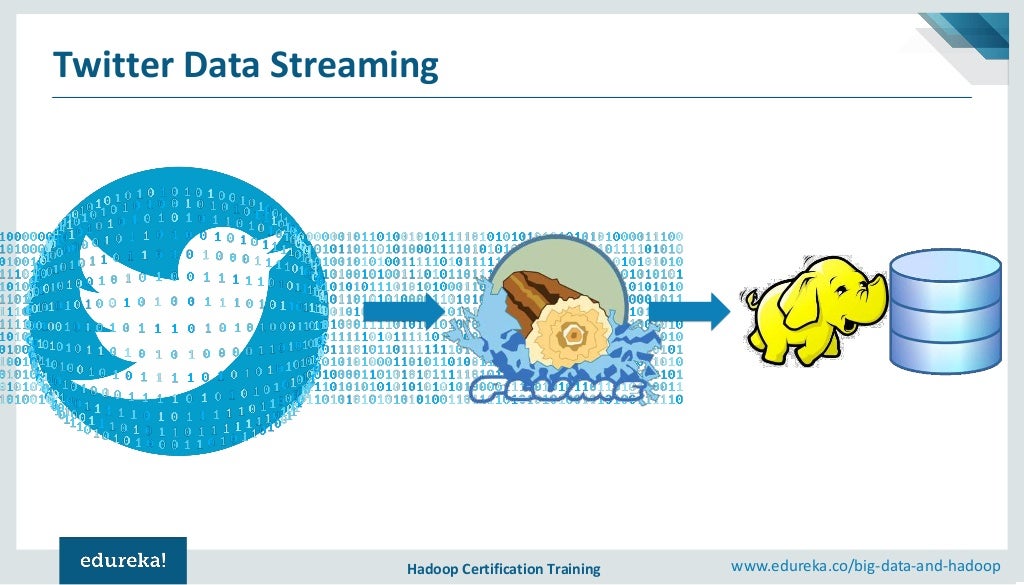

Apache Flume Introduction YouTube

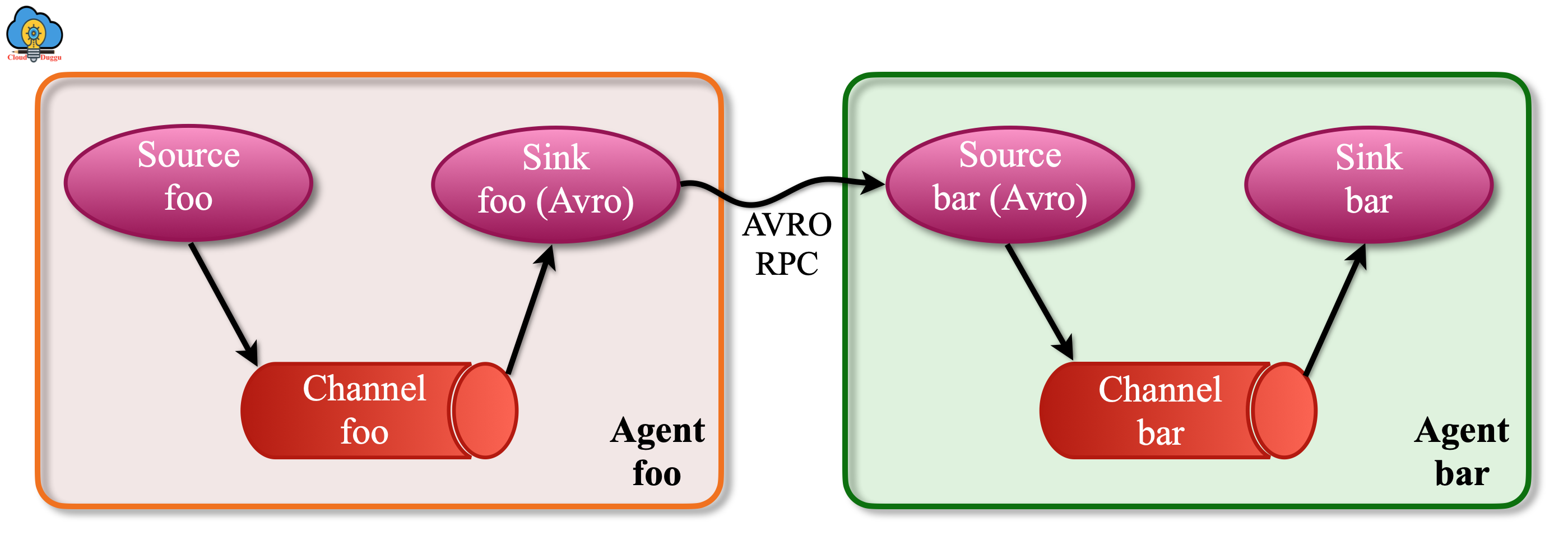

Hadoop Flume Tutorial: Fundamentals of Apache Flume

Apache Flume is an agent for data collection. It is generally used for log data. Flume takes data from sources such as Avro, Syslog, and files and delivers it to destinations such as Hadoop HDFS or HBase. Apache Flume is composed of six important components:

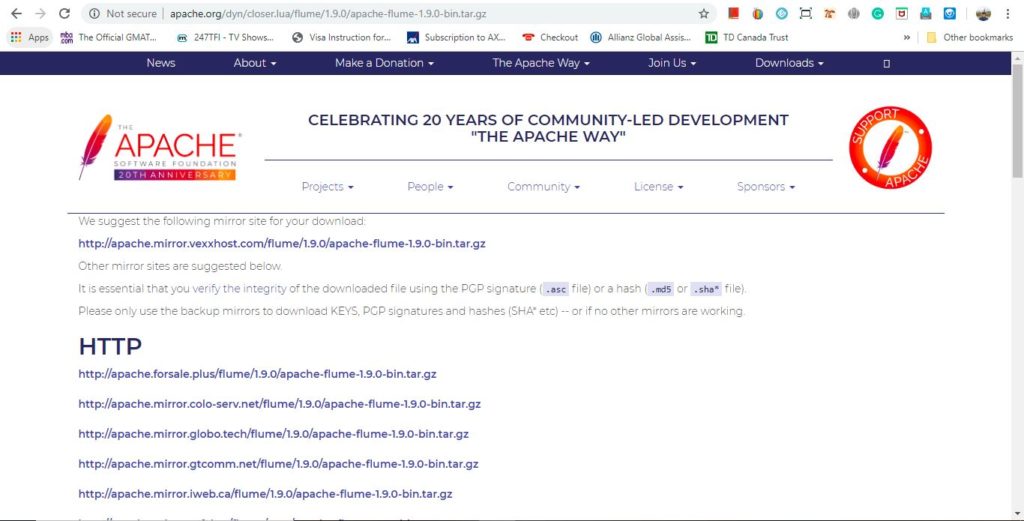

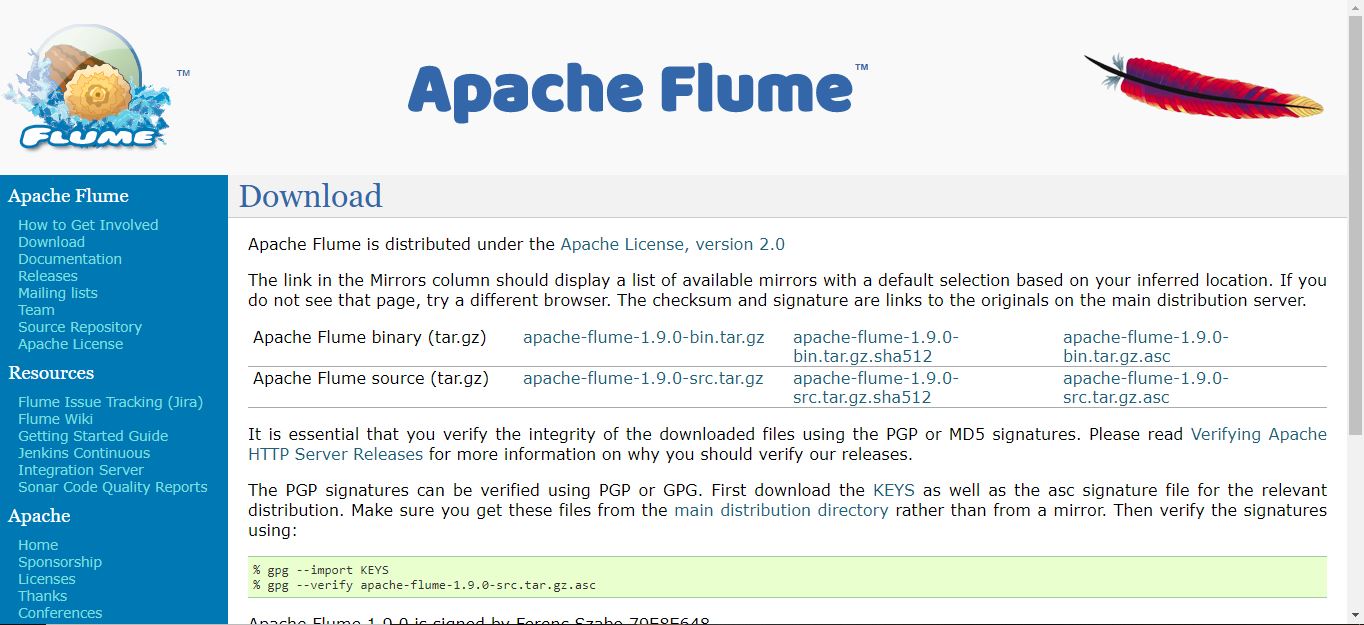
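Whatever the source (Avro, Syslog, or a file), Flume moves data as events. Each event is a unit of data made up of a set of string headers and a byte-array body. The record below is a hypothetical stand-in for that structure and is not Flume's own event interface. The header name `origin` is an illustrative assumption.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;

// Hypothetical stand-in for a Flume-style event: string headers plus an opaque byte[] body.
record FlumeEventSketch(Map<String, String> headers, byte[] body) {

    // Copy the headers into an immutable map.
    FlumeEventSketch {
        headers = Map.copyOf(headers);
    }

    // Wrap one line of input; the "origin" header name is an illustrative assumption.
    static FlumeEventSketch fromLine(String origin, String line) {
        return new FlumeEventSketch(Map.of("origin", origin),
                line.getBytes(StandardCharsets.UTF_8));
    }

    // The body is just bytes; decoding it as UTF-8 text is the consumer's choice.
    String bodyAsText() {
        return new String(body, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        FlumeEventSketch e = fromLine("syslog", "sshd[42]: Accepted password for alice");
        System.out.println(e.headers().get("origin")); // syslog
        System.out.println(e.bodyAsText());
    }
}
```

Keeping the body as raw bytes means the transport layer never needs to understand the payload format. Only the final consumer decides how to decode it.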

Easy Apache Flume Tutorial Download

Apache Flume is a reliable, distributed system for collecting, aggregating, and moving massive quantities of log data. It has a simple yet flexible architecture based on streaming data flows. It is used to collect log data from web server log files and aggregate it into HDFS for analysis.

Data In Motion Migrating Apache Flume Flows to Apache NiFi Kafka

Step 5: Next, copy the downloaded tar file into a directory of your choice and extract its contents with the following command: `sudo tar -xvf apache-flume-1.9.-bin.tar.gz`. This command creates a new directory named 'apache-flume-1.9.-bin', which serves as the installation directory.

Tutorial 9 Apache Flume Tutorial Software Testing Class

Apache Flume: An Introduction

Apache Flume is an open-source distributed system that originated at Cloudera and is developed by the Apache Software Foundation. It is designed to extract, aggregate, and move data efficiently from various sources to a centralized storage or processing system, and it is commonly used in big data environments.

1. It is an open-source framework.
2. It is a highly available, robust, and fault-tolerant service.
3. It has tunable reliability mechanisms for failover and recovery.
4. It supports complex data flows such as fan-in, multi-hop, and fan-out flows.
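The fan-out flows mentioned in point 4 can be modelled as a routing step between a source and several channels. Flume's documentation describes two channel selectors for this. A replicating selector copies each event to every channel. A multiplexing selector chooses channels based on a header value. The class below is a simplified, hypothetical sketch of both behaviours, with channels reduced to in-memory lists. It is not Flume's selector implementation, and the channel names and the `type` header are illustrative.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of fan-out from one source to several channels.
// Channels are reduced to in-memory lists; this is not Flume's selector code.
final class FanOutSketch {
    private final Map<String, List<String>> channels = new LinkedHashMap<>();

    FanOutSketch(String... channelNames) {
        for (String name : channelNames) {
            channels.put(name, new ArrayList<>());
        }
    }

    // Replicating behaviour: every channel receives a copy of the event.
    void replicate(String body) {
        for (List<String> channel : channels.values()) {
            channel.add(body);
        }
    }

    // Multiplexing behaviour: route by one header value; unmapped or missing
    // values fall back to the default channel.
    void multiplex(Map<String, String> headers, String body, String headerName,
                   Map<String, String> routes, String defaultChannel) {
        String value = headers.get(headerName);
        String target = (value != null && routes.containsKey(value))
                ? routes.get(value) : defaultChannel;
        channels.get(target).add(body);
    }

    List<String> channel(String name) {
        return channels.get(name);
    }

    public static void main(String[] args) {
        FanOutSketch fan = new FanOutSketch("hdfs", "hbase", "archive");
        Map<String, String> routes = Map.of("error", "hbase");
        fan.replicate("a");
        fan.multiplex(Map.of("type", "error"), "b", "type", routes, "hdfs");
        fan.multiplex(Map.of(), "c", "type", routes, "hdfs");
        System.out.println(fan.channel("hdfs"));    // [a, c]
        System.out.println(fan.channel("hbase"));   // [a, b]
        System.out.println(fan.channel("archive")); // [a]
    }
}
```

Fan-in is the mirror image: several sources write into the same channel, which a single sink then drains.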

In this Apache Flume tutorial blog, we will look at how Flume helps stream data from various sources. But first, let us understand the importance of data ingestion. Data ingestion is the first and a crucial step in processing and analysing data and then deriving business value from it.

Apache Flume is an open-source tool for collecting, aggregating, and moving huge amounts of streaming data from external web servers to a central store such as HDFS or HBase. It is a highly available and reliable service with tunable recovery mechanisms.
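A simple way to picture tunable recovery is failover. Events go to the highest-priority destination, and when that destination fails they move on to the next one. The sketch below assumes that each sink reports success as a boolean and that a larger priority number means "try first". `FailoverSketch` and `Sink` are hypothetical names. The sketch also omits the back-off that a production failover mechanism would apply to a sink that has just failed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical failover sketch: try sinks from highest to lowest priority.
final class FailoverSketch {
    // A destination with a priority; deliver() returns true on success.
    record Sink(String name, int priority, Predicate<String> deliver) {}

    private final List<Sink> sinks;

    FailoverSketch(List<Sink> configured) {
        sinks = new ArrayList<>(configured);
        // Larger priority value is tried first (an assumption of this sketch).
        sinks.sort((a, b) -> Integer.compare(b.priority(), a.priority()));
    }

    // Returns the name of the sink that accepted the event, or null if all failed.
    String process(String event) {
        for (Sink sink : sinks) {
            if (sink.deliver().test(event)) {
                return sink.name();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        FailoverSketch failover = new FailoverSketch(List.of(
                new Sink("local-file", 5, e -> true),
                new Sink("hdfs", 10, e -> false))); // simulate HDFS being down
        System.out.println(failover.process("GET /index.html 200")); // local-file
    }
}
```

Returning `null` when every sink fails leaves the caller to decide whether to retry or keep the event buffered. In a real pipeline it would normally stay in the channel rather than be dropped.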

Apache Hadoop Ecosystem Tutorial CloudDuggu

Apache Flume Tutorial - Learn Apache Flume in simple and easy steps from basic to advanced concepts with clear examples including Introduction, Architecture, Other Components, Environment Setup, Data Flow, Flow Configuration, Channel Selectors, Sink Processors, Event Serializers, Interceptors, Tools

Apache Flume Tutorial for Beginners

Apache Flume Data Flow Tutorial CloudDuggu

Apache Flume is a tool designed to copy log data or streaming data from many different web servers to HDFS. It supports several sources, including:

- 'Tail': data is piped from local files and written into HDFS via Flume, much like the Unix `tail` command.
- System logs
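The 'Tail' behaviour can be approximated as follows. The reader remembers how far into the file its last read got, and on each poll it returns only the complete lines added since then. This is a hypothetical sketch, not one of Flume's own file-tailing sources. It assumes newline-terminated UTF-8 lines, and it treats a file that has shrunk as truncated, restarting from the beginning.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

// Hypothetical 'tail'-style reader: remembers a byte offset and, on each poll,
// returns only complete lines appended since the previous poll.
final class TailSketch {
    private final Path file;
    private long offset = 0;

    TailSketch(Path file) {
        this.file = file;
    }

    List<String> poll() throws IOException {
        List<String> lines = new ArrayList<>();
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "r")) {
            if (raf.length() < offset) {
                offset = 0; // file shrank (truncated or replaced): start over
            }
            raf.seek(offset);
            byte[] rest = new byte[(int) (raf.length() - offset)];
            raf.readFully(rest);
            int start = 0;
            for (int i = 0; i < rest.length; i++) {
                if (rest[i] == '\n') {
                    lines.add(new String(rest, start, i - start, StandardCharsets.UTF_8));
                    start = i + 1;
                }
            }
            offset += start; // leave any partial last line for the next poll
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        Path log = Files.createTempFile("access", ".log");
        Files.writeString(log, "line1\nline2\npart");
        TailSketch tail = new TailSketch(log);
        System.out.println(tail.poll()); // [line1, line2]
        Files.writeString(log, "ial\nline4\n", StandardOpenOption.APPEND);
        System.out.println(tail.poll()); // [partial, line4]
        Files.delete(log);
    }
}
```

Holding back the unfinished last line avoids emitting half-written records while the web server is still appending to the file.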